Automic Automation / Apache Kafka Agent Integration Use Case

In our Apache Kafka environment, a Producer called AppData sends messages that can contain one of two values, standby or start. When a message with the value start arrives, a third-party application called TradeAPP should be started. Once TradeAPP has started, another third-party application called PaymentApp should be notified so that it can take further action. PaymentApp (the Consumer) listens for messages from the environment and triggers those actions when a message with the value "TradeAPP started" arrives.

This use case describes how to configure an Automic Automation Workflow with three Jobs that orchestrates and automates this scenario through the Automic Automation / Apache Kafka Agent integration:

- The first Job is a Consumer Job that listens to the Topic on Apache Kafka to which AppData writes messages.

- The second Job is a Windows Job that triggers the execution of TradeAPP.

- The third and last Job is a Producer Job that publishes a message to the Topic on Apache Kafka to which PaymentApp is listening. PaymentApp takes further action only if the message contains the value "TradeAPP started".

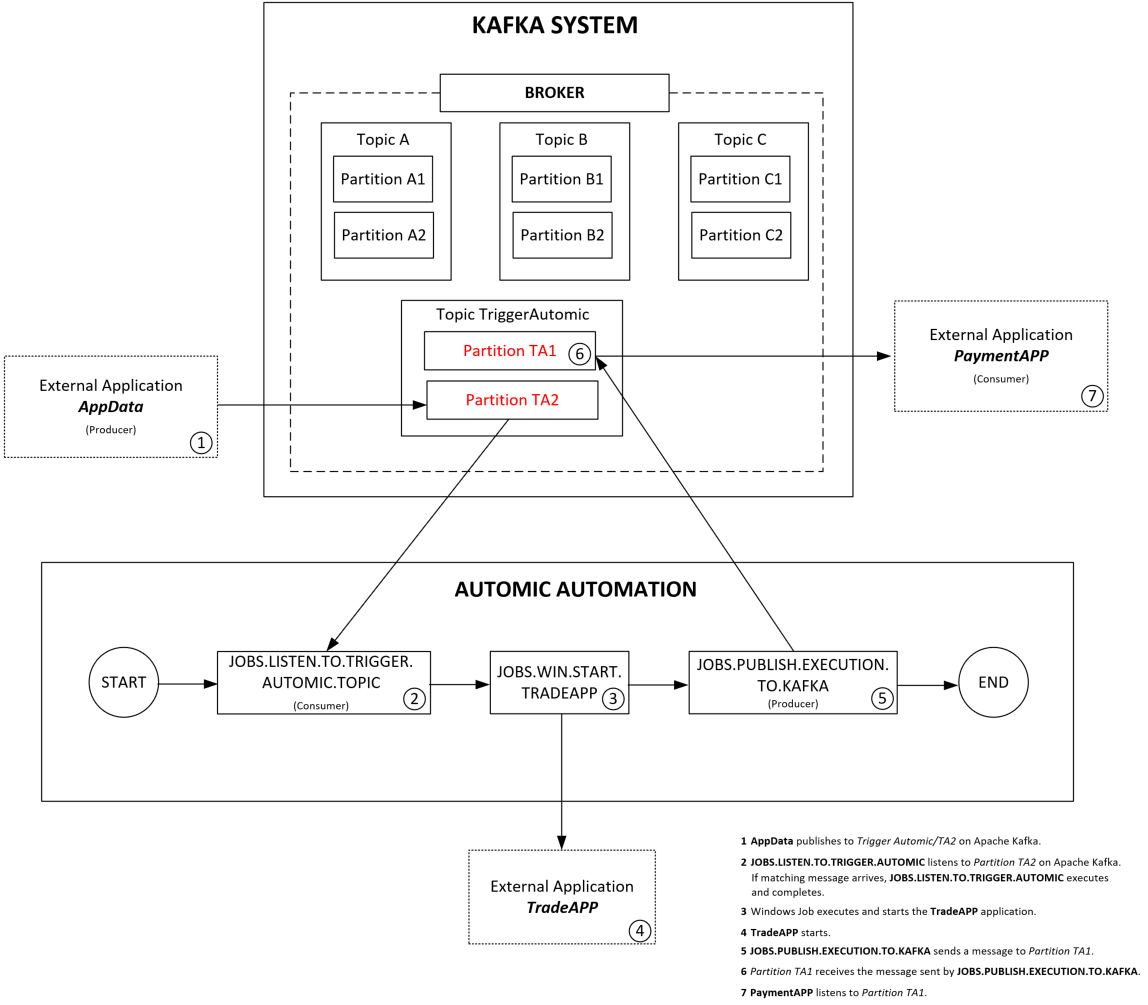

This graphic illustrates our use case:

The Apache Kafka/Automic Automation Use Case Explained

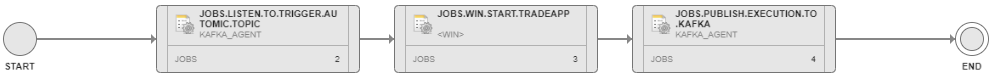

This is our Workflow:

As soon as you execute the Workflow, the first task, JOBS.LISTEN.TO.TRIGGER.AUTOMIC.TOPIC, starts and keeps running until a message that matches the filter criteria arrives in the TriggerAutomic Topic.
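The waiting behavior of the first task can be pictured with a small, self-contained Python sketch. It does not use the Agent or a Kafka client: a plain list stands in for the TriggerAutomic Topic, and the function name `wait_for_match` is hypothetical. It also assumes that the filter must match the whole message value, which this page does not specify.

```python
import re
from typing import Iterable, Optional


def wait_for_match(messages: Iterable[str], value_regex: str) -> Optional[str]:
    """Return the first message value that matches value_regex, else None.

    Assumption: the whole value must match (re.fullmatch). The real Agent
    may apply the expression differently.
    """
    pattern = re.compile(value_regex)
    for value in messages:
        if pattern.fullmatch(value):
            return value  # the Consumer Job would complete at this point
        # Non-matching values (for example "standby") are skipped.
    return None  # input exhausted without a match; a real Job would keep waiting


# Hypothetical sequence of values published by AppData.
incoming = ["standby", "standby", "start", "standby"]
matched = wait_for_match(incoming, "start")
print(matched)
```

In this sketch the two standby values are ignored and the loop stops at the first start value, which corresponds to the point at which the next task in the Workflow would begin.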

This is how Apache Kafka and Automic Automation integrate in our use case:

- AppData is the Producer in the Apache Kafka environment. It publishes messages to the TriggerAutomic Topic, to a Partition called Partition TA2. The messages can have one of two values:

  - standby

    When a message with this value arrives, nothing else should happen.

  - start

    When a message with this value arrives, TradeAPP should start.

- In our Workflow, the Consumer Job is called JOBS.LISTEN.TO.TRIGGER.AUTOMIC.TOPIC. While the Workflow is executing, JOBS.LISTEN.TO.TRIGGER.AUTOMIC.TOPIC remains in Active status until a message with the value start arrives.

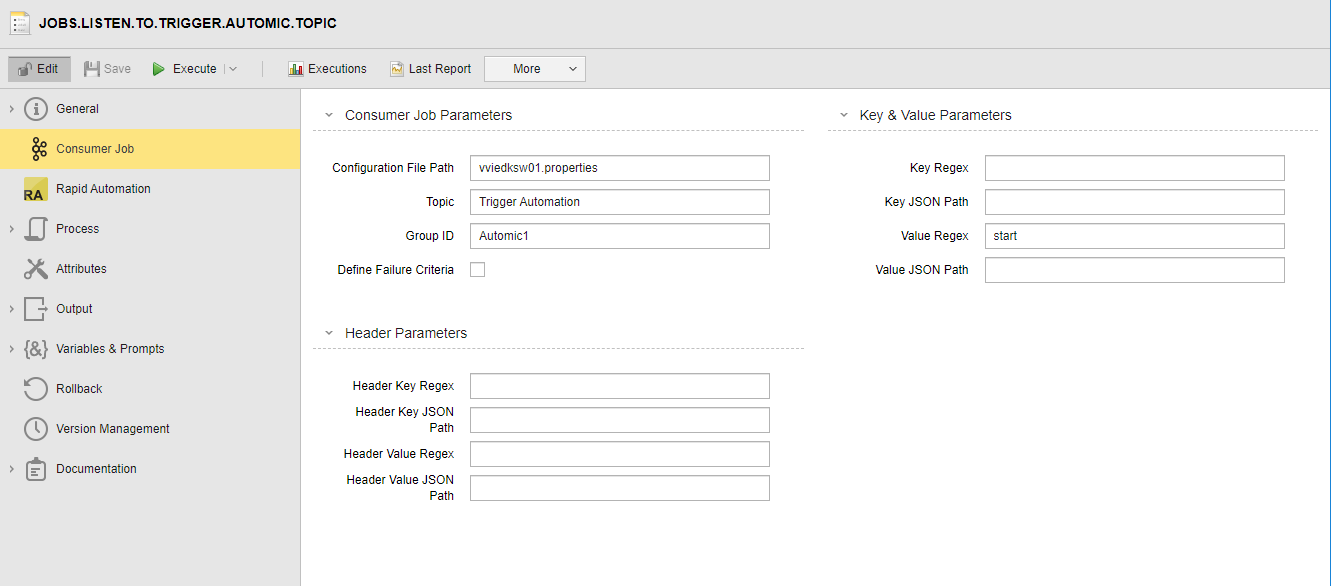

This is what this Job looks like:

The Job contains the parameters that establish the connection to Apache Kafka and that define the filter criteria that messages should meet for any further action to take place. In our use case, we define a simple Job where the following fields are populated:

- The Configuration File Path, which is the path where the Apache Kafka configuration file is stored on the Agent machine. This file contains the endpoint data to connect to the target Apache Kafka platform, the broker that the Job will listen to, and so on.

  By specifying this path we establish the connection to the Apache Kafka platform.

- Topic within the broker to which the Job listens.

- Group ID of the Consumer Group to which the Apache Kafka Consumer Job belongs. In our case, this is Automic1. For information about Consumer Groups, see Consumer Groups.

- Value Regex, which in our case is a string: start. This is the message that the Job is expecting.

  When such a message arrives, the Job completes successfully and the next Job in the Workflow starts executing.

- JOBS.WIN.START.TRADEAPP is an Automic Automation Windows Job. It is configured to start TradeAPP.

- TradeAPP starts. This step occurs outside Automic Automation and Apache Kafka. We mention it here for completeness.

- In our Workflow, as soon as JOBS.WIN.START.TRADEAPP completes, JOBS.PUBLISH.EXECUTION.TO.KAFKA starts. This is a Producer Job that writes messages to the same Topic on Apache Kafka as the Consumer Job (TriggerAutomic) but to a different Partition (Partition TA1 in this case). The message contains the value "TradeAPP started".

- On Apache Kafka, Partition TA1 receives the message from Automic Automation.

- PaymentApp is the Consumer in the Apache Kafka environment. It listens for messages that arrive in the Partitions of the TriggerAutomic Topic. In our Workflow, JOBS.PUBLISH.EXECUTION.TO.KAFKA publishes a message to Partition TA1 that contains the value "TradeAPP started". This enables PaymentApp to trigger further actions outside the Apache Kafka environment.
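The end-to-end flow described in the steps above can be traced with a minimal simulation. This is only a sketch under stated assumptions: a dictionary of lists stands in for the TriggerAutomic Topic and its two Partitions, and the functions `run_workflow` and `payment_app_should_act` are hypothetical names, not part of Automic Automation or Apache Kafka.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, List

# In-memory stand-in for a Topic: partition name -> list of message values.
Topic = DefaultDict[str, List[str]]


def run_workflow(topic: Topic, start_app: Callable[[], None]) -> bool:
    """Mimic the three Jobs: consume from TA2, start the app, publish to TA1."""
    # Job 1: look for a "start" value on the Partition that AppData writes to.
    if "start" not in topic["Partition TA2"]:
        return False  # only "standby" so far; the Consumer Job would keep waiting
    # Job 2: stands in for JOBS.WIN.START.TRADEAPP.
    start_app()
    # Job 3: stands in for JOBS.PUBLISH.EXECUTION.TO.KAFKA.
    topic["Partition TA1"].append("TradeAPP started")
    return True


def payment_app_should_act(topic: Topic) -> bool:
    """PaymentApp reacts only to the value 'TradeAPP started'."""
    return "TradeAPP started" in topic["Partition TA1"]


trigger_automic: Topic = defaultdict(list)
started: List[str] = []  # records each simulated start of TradeAPP

trigger_automic["Partition TA2"].append("standby")
first_run = run_workflow(trigger_automic, lambda: started.append("TradeAPP"))

trigger_automic["Partition TA2"].append("start")
second_run = run_workflow(trigger_automic, lambda: started.append("TradeAPP"))

print(first_run, second_run, started, payment_app_should_act(trigger_automic))
```

The first call finds only a standby message and does nothing. The second call sees start, runs the simulated application start, and writes the notification to Partition TA1, which is the only condition under which PaymentApp acts.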

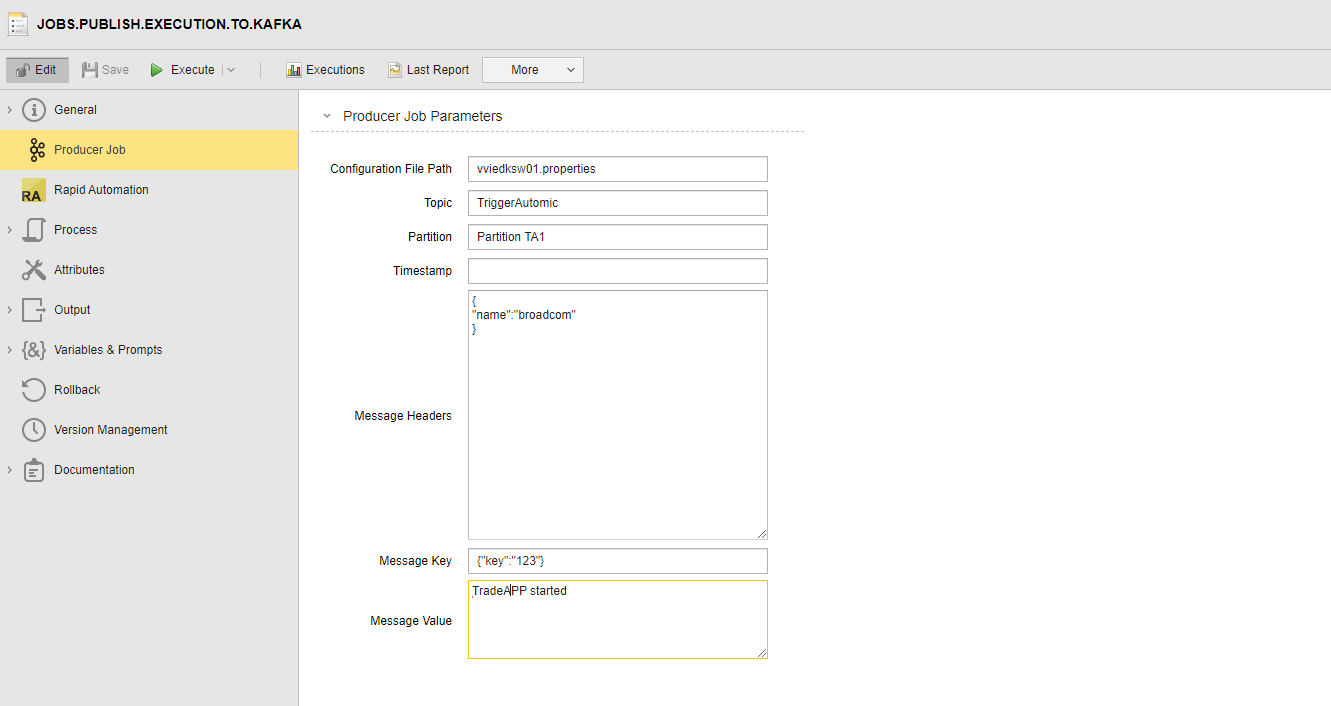

This is what the Producer Job looks like:

- As in the Consumer Job, the Configuration File Path here is the path where the Apache Kafka configuration file is stored on the Agent machine.

- Topic within the broker to which this Job will write its message.

- Message Headers is optional. It contains metadata about the message.

- Message Key / Value: The value contains the actual message that the Job writes to the topic.
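To make the relationship between these fields concrete, the following sketch models a message as a small Python data class. The class `ProducerRecord`, its `encoded` method, and the `source` header are hypothetical and serve illustration only; they are not Automic Automation types. The UTF-8 encoding step reflects the general fact that Kafka transmits keys, values, and header values as bytes, not any documented behavior of the Producer Job.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class ProducerRecord:
    """Illustrative container for the Producer Job fields (not an Automic type)."""
    topic: str
    value: str
    key: Optional[str] = None  # optional in this sketch
    headers: List[Tuple[str, str]] = field(default_factory=list)

    def encoded(self) -> dict:
        """Encode the text fields as UTF-8 bytes."""
        return {
            "topic": self.topic,
            "key": self.key.encode("utf-8") if self.key is not None else None,
            "value": self.value.encode("utf-8"),
            "headers": [(name, val.encode("utf-8")) for name, val in self.headers],
        }


record = ProducerRecord(
    topic="TriggerAutomic",
    value="TradeAPP started",
    headers=[("source", "automic")],  # hypothetical header, for illustration only
)
print(record.encoded())
```

Here the value carries the text that PaymentApp is waiting for, while the header only adds descriptive metadata and does not affect the message content.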
