Sizing Requirements for all Analytics components

Analytics Backend and Analytics Datastore

Database systems and database storage must always be fail-safe and redundant. This section does not address that topic.

| Modules | Big: No. | Big: CPU | Big: Memory | Big: Disk | High End: No. | High End: CPU | High End: Memory | High End: Disk |
|---|---|---|---|---|---|---|---|---|
| Analytics & Datastore | 1 x | 32 Cores | 256 GB | 2 TB | 1 x | 32 Cores | 256+ GB | 4 TB |

| Number of | Big Configuration | High End Configuration |
|---|---|---|
| Concurrent users | < 200 | > 200 |
| Agents | < 1 000 | > 1 000 |
| Object definitions | < 100 000 | > 100 000 |
| Total executions per day | < 1 500 000 | > 1 500 000 |
| WP | 2 x 16 | 4 x 16 |
| DWP* | 2 x 45 | 4 x 45 |
| JWP* | 2 x 10 | 4 x 10 |
| CP | 2 x 2 | 4 x 4 |
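The thresholds in the table can be read as a simple decision rule. The following Python sketch assumes that exceeding any single Big Configuration limit is enough to call for the High End Configuration; the table itself does not say how the metrics should be combined, and the names Workload, BIG_LIMITS, HARDWARE and choose_configuration are hypothetical.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class Workload:
    """Expected load of an installation (hypothetical field names)."""
    concurrent_users: int
    agents: int
    object_definitions: int
    executions_per_day: int


# Upper bounds of the Big Configuration, copied from the table above.
BIG_LIMITS = Workload(
    concurrent_users=200,
    agents=1_000,
    object_definitions=100_000,
    executions_per_day=1_500_000,
)

# Hardware for "Analytics & Datastore" per configuration, from the table above.
HARDWARE = {
    "Big": "1 x 32 cores, 256 GB memory, 2 TB disk",
    "High End": "1 x 32 cores, 256+ GB memory, 4 TB disk",
}


def choose_configuration(load: Workload) -> str:
    """Return "High End" if any metric exceeds its Big limit, else "Big".

    Assumption: a single exceeded limit is sufficient to move up.
    """
    for f in fields(Workload):
        if getattr(load, f.name) > getattr(BIG_LIMITS, f.name):
            return "High End"
    return "Big"


if __name__ == "__main__":
    load = Workload(concurrent_users=150, agents=800,
                    object_definitions=60_000, executions_per_day=2_000_000)
    config = choose_configuration(load)
    print(config, "->", HARDWARE[config])
```

In this example only the daily executions exceed the Big limit, which under the stated assumption is enough to select the High End Configuration.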

Sizing and storage recommendations

For medium-sized and larger installations, we recommend setting up a regular backup and truncation of the Analytics Datastore to keep chart performance stable (for example, back up and truncate the Datastore so that it keeps only the last 3-12 months of data).

See: Datastore Operational Guide

Setup Recommendations

- The UI plugin is always added to the host(s) where AWI is installed.

- The Datastore and the Backend should both be installed on a dedicated host.

- The Backend must be accessible via HTTP(S) from the AWI hosts and must be able to connect to the Datastore and to all required databases (AE, ARA).

| Topic | Details |
|---|---|
| What disk space is required? | To estimate the disk space required in the Datastore, calculate with about 1 GB per 1 000 000 executions in the Automation Engine. |
| Do I need to back up the Datastore? | The Analytics Datastore is designed to store large amounts of data. For medium-sized or larger installations, it can still be useful to remove data older than approximately 1 year from the Analytics Datastore. We recommend setting up the provided backup actions (ANALYTICS ACTION PACK). |
| General database rules | This applies to all database vendors: the log files should always be placed on the fastest available disks (SSDs). For Oracle, this is the REDO LOG FILE DESTINATION. |
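The rule of thumb of about 1 GB per 1 000 000 executions can be combined with a retention window, such as the 3-12 months suggested above, to estimate Datastore disk usage. This is a minimal sketch: the function name is hypothetical, and months are approximated as 90 and 365 days.

```python
GB_PER_MILLION_EXECUTIONS = 1.0  # rule of thumb from the table above


def estimate_datastore_gb(executions_per_day: int, retention_days: int) -> float:
    """Rough Datastore size in GB for a daily load and a retention window."""
    if executions_per_day < 0 or retention_days < 0:
        raise ValueError("inputs must be non-negative")
    total_executions = executions_per_day * retention_days
    return total_executions / 1_000_000 * GB_PER_MILLION_EXECUTIONS


if __name__ == "__main__":
    # 1 500 000 executions per day, the upper limit of the Big Configuration
    for months, days in ((3, 90), (12, 365)):
        gb = estimate_datastore_gb(1_500_000, days)
        print(f"{months:>2} months: ~{gb:.1f} GB")
```

For 1 500 000 executions per day, this gives about 135 GB for 3 months and about 547.5 GB for 12 months, both within the 2 TB disk of the Big Configuration.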

Maximizing Efficiency with the Analytics Datastore

For big and high-end configurations, we recommend installing PostgreSQL 9.6 or later. These versions support parallel queries.

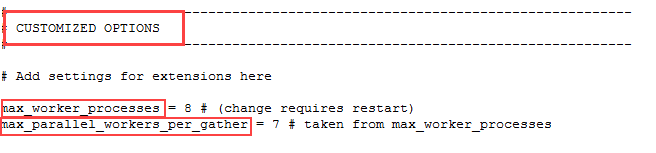

Set the following two parameters in the PostgreSQL configuration to enable parallel queries.

Set these parameters before PostgreSQL is started; if PostgreSQL is already running, restart the PostgreSQL process after changing them.

- max_worker_processes = 8 # default: 8; set to the number of cores the database administrator makes available to the PostgreSQL database

- max_parallel_workers_per_gather = 7 # drawn from max_worker_processes; set to the value of max_worker_processes minus 1

The postgresql.conf file is located at:

Windows: C:\Program Files\PostgreSQL\9.6\data\postgresql.conf

Linux: /etc/postgresql/9.6/main/postgresql.conf

Example:

On a machine with 32 cores that runs both PostgreSQL and the Analytics Backend, the following settings work well. They reserve 4 cores for the Backend:

- max_worker_processes = 28 # 32 cores minus 4 reserved for the Backend

- max_parallel_workers_per_gather = 27 # max_worker_processes minus 1
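The rule for the two parameters (all available cores for max_worker_processes, one fewer for max_parallel_workers_per_gather) can be written as a small helper that also renders the postgresql.conf lines. This is a sketch; the helper names are hypothetical. On PostgreSQL 10 and later, the max_parallel_workers parameter also caps the number of parallel workers, and this sketch does not set it.

```python
from typing import Dict


def parallel_query_settings(total_cores: int, reserved_cores: int = 0) -> Dict[str, int]:
    """Derive the two parallel-query parameters from the rule above."""
    available = total_cores - reserved_cores  # cores the DBA gives to PostgreSQL
    if available < 2:
        raise ValueError("at least 2 cores must remain for PostgreSQL")
    return {
        "max_worker_processes": available,
        "max_parallel_workers_per_gather": available - 1,
    }


def to_postgresql_conf(settings: Dict[str, int]) -> str:
    """Render the settings as postgresql.conf lines."""
    return "\n".join(f"{name} = {value}" for name, value in settings.items())


if __name__ == "__main__":
    # 32-core machine with 4 cores reserved for the Analytics Backend
    print(to_postgresql_conf(parallel_query_settings(32, reserved_cores=4)))
```

For the 32-core example above, this prints max_worker_processes = 28 and max_parallel_workers_per_gather = 27.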

Analytics Rule Engine

Message queue systems and database storage must always be fail-safe and redundant. This section does not address that topic.

Sizing and storage recommendations


IA Agent Nodes

- Refer to the existing recommendations for the Analytics Backend (above).

- On a single box: 16 cores for a small configuration and 32 cores for a medium configuration.

- Add 8-16 GB RAM to the existing memory recommendations.


Streaming Platform Nodes

- 1x4 Cores

- 16 GB RAM

- Disk per broker: expected event size * expected events per second * retention period in seconds (how long data is kept in the Streaming Platform) * replication factor / number of brokers. For example: 80 bytes * 30 000 events per second * 86 400 seconds (1 day) of retention * 1 (no replication) / 1 (one broker) = 207.36 GB, i.e. roughly 210 GB. A single 80-byte raw event results in around 3 KB of disk usage in the Streaming Platform.

- The disk buffer cache lives in memory, so each broker needs sufficient RAM. How much depends on how often the Streaming Platform flushes to disk: the more flushes, the lower the throughput.

- A single broker can host only one replica of each partition, so the number of brokers must be at least the number of replicas.
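The disk formula and the broker/replica rule above can be combined in one function. This is a minimal sketch with a hypothetical function name; the result is in bytes, and the example converts it using decimal units (1 GB = 10^9 bytes).

```python
def streaming_disk_per_broker_bytes(
    event_size_bytes: float,
    events_per_second: float,
    retention_seconds: float,
    replication_factor: int = 1,
    brokers: int = 1,
) -> float:
    """Disk needed per broker, following the formula above."""
    if brokers < 1 or replication_factor < 1:
        raise ValueError("brokers and replication_factor must be >= 1")
    if replication_factor > brokers:
        # Each broker holds at most one replica per partition.
        raise ValueError("replication_factor cannot exceed the number of brokers")
    return (event_size_bytes * events_per_second * retention_seconds
            * replication_factor / brokers)


if __name__ == "__main__":
    one_day = 86_400
    raw = streaming_disk_per_broker_bytes(80, 30_000, one_day)
    print(f"raw event size: {raw / 1e9:.2f} GB per broker")
```

With the example values, this returns 207 360 000 000 bytes (about 207 GB) per broker. For a more conservative figure, you can pass a measured on-disk size per event instead of the raw event size, since the text above notes that an 80-byte raw event takes around 3 KB on disk.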

Rule Engine Nodes

- 1x8 Cores

- 32 GB RAM

- Disk: 32 GB

- Memory is critical: without enough of it, the Rule Engine starts spilling to disk, which decreases throughput and, as soon as backpressure occurs, increases disk usage on the Streaming Platform.

Other Considerations:

- Throughput can be increased by a factor of 5-10 (depending on the batch size) when the Rule Engine, the Automic processes, and the Streaming Platform each run on their own machines.

- Maximum throughput is 1000 concurrent users on a single box; beyond that, backpressure occurs.

- Throughput scales with batch size.

- Streaming Platform logs.dir size: 22.9 GB for ~67 million events (~3 KB per event).

Event ingestion on a single-box installation is currently limited to about 2500 events per second (with one running rule and a trigger rate of 1%). You can increase this by distributing the services, choosing a larger batch size, or using more than one agent for ingestion.

Analytics Streaming Platform

Streaming Platform systems and database storage must always be fail-safe and redundant. This section does not address that topic.

| Modules | Big: No. | Big: CPU | Big: Memory | Big: Disk | High End: No. | High End: CPU | High End: Memory | High End: Disk |
|---|---|---|---|---|---|---|---|---|
| Streaming Platform | 1 x | 32 Cores | 256 GB | 2 TB | 1 x | 32 Cores | 256+ GB | 4 TB |

| Number of | Big Configuration | High End Configuration |
|---|---|---|
| Concurrent users | < 200 | > 200 |
| Agents | < 1 000 | > 1 000 |
| Object definitions | < 100 000 | > 100 000 |
| Total executions per day | < 1 500 000 | > 1 500 000 |

Typical Configuration Requirements

The following figures are given per Collector and per Data Category, for several stages of typical configurations.

When sharing data with Automic, you only need storage for temporarily storing data in the Streaming Platform and for exporting it to AVRO files. The JSON figures are included so that you can estimate the required storage if the data is extracted to AVRO files and then converted to JSON files on the same disk as the Streaming Platform.

All storage figures are for 1 day of data retention.

| Collector | Data Category | Driver | Data period | Storage for Streaming Platform | Storage for extracting to AVRO files | Storage for converting AVRO files to JSON files | Total storage to provision (Streaming Platform + AVRO export files + JSON conversion files) | JSON private files |
|---|---|---|---|---|---|---|---|---|
| Data Stream "Business automation platform environment" | | | | | | | | |
| Automation Engine Inventory Collector | AEProcesses | 10 Automation Engine Processes | 12 hours | 0.0031 MB | 0.0030 MB | 0.0060 MB | 0.0121 MB | 0.0061 MB |
| Automation Engine Inventory Collector | AEProcesses | 50 Automation Engine Processes | 12 hours | 0.0153 MB | 0.0149 MB | 0.0301 MB | 0.0604 MB | 0.0304 MB |
| Automation Engine Performance Collector | AEPerfGlobal | 1 Automation Engine | 1 minute | 0.1613 MB | 0.1613 MB | 0.5548 MB | 0.8774 MB | 0.5548 MB |
| Automation Engine Performance Collector | AEPerfProcesses | 10 Automation Engine Processes | 1 minute | 3.1095 MB | 3.0675 MB | 8.8925 MB | 15.0695 MB | 8.9264 MB |
| Automation Engine Performance Collector | AEPerfProcesses | 50 Automation Engine Processes | 1 minute | 15.5476 MB | 15.3375 MB | 44.4623 MB | 75.3473 MB | 44.6320 MB |
| Automation Engine Performance Collector | AEPerfIssues | 1 issue | 1 minute (*) | 0.2617 MB | 0.2617 MB | 0.3461 MB | 0.8695 MB | 0.3461 MB |
| OS Performance Collector | OSMetrics | 1 machine | 1 minute | 4.9996 MB | 5.0344 MB | 10.5238 MB | 20.5577 MB | 10.4892 MB |
| OS Performance Collector | OSMetrics | 5 machines | 1 minute | 24.9978 MB | 25.1718 MB | 52.6189 MB | 102.7885 MB | 52.4460 MB |
| Data Stream "Workflow environment context" | | | | | | | | |
| OS Performance Collector | OSMetrics | 5 machines | 1 minute | 5.2725 MB | 5.3092 MB | 11.0983 MB | 21.6801 MB | 11.0619 MB |
| OS Performance Collector | OSMetrics | 100 machines | 1 minute | 105.4503 MB | 106.1844 MB | 221.9664 MB | 433.6010 MB | 221.2372 MB |
| OS Performance Collector | OSMetrics | 500 machines | 1 minute | 527.2513 MB | 530.9219 MB | 1109.8320 MB | 2168.0052 MB | 1106.1859 MB |
| OS Performance Collector | OSMetrics | 1000 machines | 1 minute | 1054.5026 MB | 1061.8438 MB | 2219.6640 MB | 4336.0105 MB | 2212.3718 MB |

(*) This example assumes one performance issue every minute. Under ideal conditions (no performance issue), no event is triggered.

Even if the amount of data stored in the Analytics Streaming Platform is less than 1 GB, the Streaming Platform still consumes 1 GB of storage.

DCS exports the data temporarily stored in the Analytics Streaming Platform to AVRO files. The "Extraction to AVRO files" figures therefore indicate both the storage required and the volume of data sent to Automic.
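To turn several rows of the 1-day retention table into one provisioning figure, you can add up the selected rows and apply the 1 GB minimum to the Streaming Platform part only. This sketch assumes that the per-collector figures are independent and simply add up; the names CollectorRow and provision_mb are hypothetical, and reading "1 GB" as 1024 MB is also an assumption.

```python
from typing import Dict, Iterable, NamedTuple


class CollectorRow(NamedTuple):
    """One row of the 1-day retention table (values in MB)."""
    label: str
    streaming_mb: float
    avro_mb: float
    json_mb: float


# Figures copied from the table above
# (Data Stream "Business automation platform environment").
AE_PERF_GLOBAL_1_AE = CollectorRow("AEPerfGlobal, 1 Automation Engine", 0.1613, 0.1613, 0.5548)
OS_METRICS_1_MACHINE = CollectorRow("OSMetrics, 1 machine", 4.9996, 5.0344, 10.5238)

# Assumption: the 1 GB minimum of the Streaming Platform, expressed in MB.
MIN_STREAMING_MB = 1024.0


def provision_mb(rows: Iterable[CollectorRow],
                 min_streaming_mb: float = MIN_STREAMING_MB) -> Dict[str, float]:
    """Sum the selected rows; the Streaming Platform never uses less than the minimum."""
    selected = list(rows)
    streaming = max(sum(r.streaming_mb for r in selected), min_streaming_mb)
    avro = sum(r.avro_mb for r in selected)
    json_files = sum(r.json_mb for r in selected)
    return {
        "streaming_platform": streaming,
        "avro_export": avro,
        "json_conversion": json_files,
        "total": streaming + avro + json_files,
    }


if __name__ == "__main__":
    for key, mb in provision_mb([AE_PERF_GLOBAL_1_AE, OS_METRICS_1_MACHINE]).items():
        print(f"{key:>20}: {mb:9.4f} MB")
```

Because of the 1 GB minimum, the Streaming Platform part dominates for small installations, so the resulting total is larger than the sum of the table's Total column.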

Configuration examples for the Data Stream "Business automation platform environment"

The following example configurations assume the DCS (Data Collection Service) default collection periods and the following collectors running:

- Automation Engine Inventory Collector

- Automation Engine Performance Collector

- OS Collector on the Automation Engine machine or machines

The examples assume that data is shared with Automic daily and that a 4-day data retention period is configured.

The JSON file storage figure is also given as an indication of the data volume you might use for your internal use cases.

Small Configuration

- 1 machine for the Automation Engine

- 8 Automation Engine Processes

- OS Collector started on the Automation Engine machine (no Automation Engine performance events)

| Data | Storage (MB) with 4 days data retention |
|---|---|
| Streaming Platform | 34.1408 |
| Extraction to AVRO files | 34.1116 |
| Converted JSON files | 81.2928 |

Large Configuration

- 5 machines for the Automation Engine

- 30 Automation Engine Processes

- OS Collector started on the 5 Automation Engine machines (no Automation Engine performance events)

| Data | Storage (MB) with 4 days data retention |
|---|---|
| Streaming Platform | 163.9348 |
| Extraction to AVRO files | 163.7888 |
| Converted JSON files | 392.0488 |