Streaming Platform Operational Guide

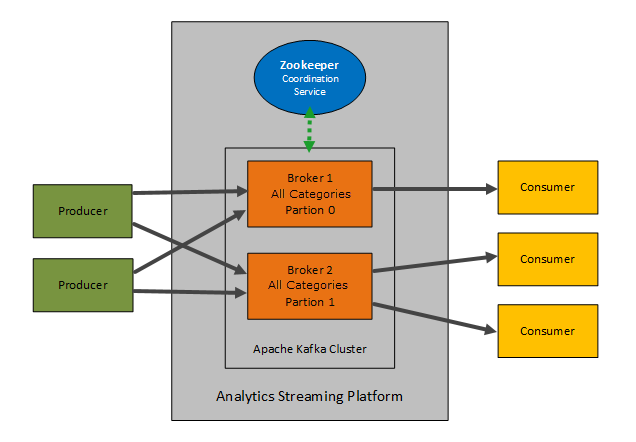

The Analytics Streaming Platform uses Apache Kafka and Zookeeper to deliver a message queue/storage and coordination service for the Event Engine feature. This document is aimed at experienced operational teams that require information about the internal workings of the CA Automic implementation of Kafka and Zookeeper.

Important! This document is not an extensive guide to the technologies used in the Analytics Streaming Platform. It outlines the methods used to deploy the CA Automic adaptation of Apache Kafka and Zookeeper.

Notes:

- For extensive information regarding the technical details of Kafka see: https://kafka.apache.org

- For extensive information regarding the technical details of Zookeeper see: https://zookeeper.apache.org/

- This document is aimed at experienced users of Apache Kafka and Zookeeper and presumes that you have some basic knowledge of the topics outlined below.


Architecture

Because the Streaming Platform is based on Kafka and Zookeeper, it shares their standard architecture.

How the Streaming Platform Interfaces with Analytics

The interface between Analytics and the Streaming Platform is configured in the application.properties file, located in the following directory: <Automic>/Automation.Platform/Analytics/backend.

Note: All collectors are activated by default.

The Event Engine feature has two topics defined in the Streaming Platform:

- events: receives the events posted to the REST endpoint in the IA Agent.

- trigger: receives the trigger messages that Rule Engine (Flink) jobs write and that the IA Agent then consumes.

See: Categories (Known as topics in Kafka)

Example of the default Streaming Platform configuration:

    # Kafka (Streaming Platform) #######
    # Specify Kafka hosts
    kafka.bootstrap_servers=localhost:9092

    #Kafka consumers and producers
    #Default Kafka consumer configs can be overridden
    #*globally: kafka.consumer_configs.default[<setting>]=<value>
    #*specific: kafka.consumer_configs.<consumer>[<setting>]=<value>
    #
    #Default Kafka producer configs can be overridden
    #*globally: kafka.producer_configs.default[<setting>]=<value>
    #*specific: kafka.producer_configs.<producer>[<setting>]=<value>
    #
    #Consumers/Producers that are used:
    #*edda_trigger:used to read/write triggers of an Rule Engine rule
    #*edda_events:used to read/write incoming events that are processed by the Rule Engine
    #kafka.producer_configs.edda_trigger[acks]=all
    #kafka.producer_configs.edda_events[acks]=all

    #Kafka topics
    #
    #Configure topic creation properties
    #*globally:kafka.topic_configs.default.default.<setting>=<value>
    #*per dataspace:kafka.topic_configs.<dataspace>.default.<setting>=<value>
    #*per topic:kafka.topic_configs.<dataspace>.<topic>.<setting>=<value>
    #
    #The following Kafka topic settings are available
    #*partitions: number of partitions that should be used for each topic
    #*replication_factor:number of replicas per partition
    #*rack_aware_mode:spread replicas of one partition across multiple racks (possible values: DISABLED, ENFORCED, SAFE)
    #kafka.topic_configs.default.default.partitions=1
    #kafka.topic_configs.default.default.replication_factor=1
    #kafka.topic_configs.default.default.rack_aware_mode=DISABLED
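The three levels of topic configuration (global, per dataspace, per topic) can be illustrated with a short Python sketch. It is not the product's own resolution logic: the function names, the dataspace name edda, and the rule that the most specific level wins are all assumptions made for illustration. The fallback values are taken from the commented-out sample lines in the configuration above.

```python
# Hypothetical sketch: resolve the effective topic settings from
# application.properties-style entries. The "most specific level wins"
# precedence is an assumption, not documented product behaviour.

PREFIX = "kafka.topic_configs."

# Fallback values copied from the commented-out sample configuration.
FALLBACKS = {
    "partitions": "1",
    "replication_factor": "1",
    "rack_aware_mode": "DISABLED",
}


def parse_properties(text):
    """Parse key=value lines, skipping blank lines and '#' comments."""
    props = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        key, _, value = line.partition("=")
        props[key.strip()] = value.strip()
    return props


def resolve_topic_config(props, dataspace, topic):
    """Return the settings that would apply to one topic in one dataspace."""
    resolved = dict(FALLBACKS)
    # Apply levels from least to most specific so later levels override.
    levels = (("default", "default"), (dataspace, "default"), (dataspace, topic))
    for ds, tp in levels:
        level_prefix = f"{PREFIX}{ds}.{tp}."
        for key, value in props.items():
            if key.startswith(level_prefix):
                resolved[key[len(level_prefix):]] = value
    return resolved


# "edda" is a hypothetical dataspace name used only for this example.
example = """
kafka.topic_configs.default.default.partitions=1
kafka.topic_configs.edda.default.partitions=3
kafka.topic_configs.edda.events.replication_factor=2
"""
props = parse_properties(example)
events_config = resolve_topic_config(props, "edda", "events")
trigger_config = resolve_topic_config(props, "edda", "trigger")
```

In this sketch the events topic picks up the dataspace-level partition count and its own replication factor, while the trigger topic falls back to the dataspace and global values.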

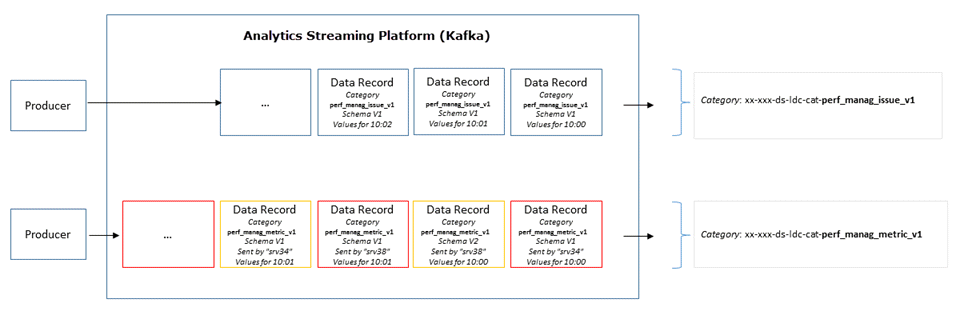

Data Organization (Dataspaces and Categories)

Streaming Platform Naming Convention

In the Streaming Platform, data streams must be separated and easily identifiable. The CA Automic solution is to use identifiers and names, and the following format has been adopted:

<uuid>_ds-<dataspaceName>_<name>

- The application identifier (the first segment) allows for the separation between different application instances sharing the same Streaming Platform installation (for example, separation between several Analytics Backend installations or between Analytics Backend and customers' own applications). The <uuid> (unique identifier) identifies the application instance.

- The dataspace identifier (the middle segment) ensures a separation of different producers/consumers in the same application. It is composed of the prefix dataspace, followed by the dataspace name in square brackets.

- The last segment is made up of a name item. The Event Engine feature simply uses a topic name (for example, events or trigger).
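As an illustration of the naming convention, the following Python sketch builds and parses names of the form <uuid>_ds-<dataspaceName>_<name>, as written in the format line above. The helper names are hypothetical, and the sketch assumes that the uuid is in the canonical 8-4-4-4-12 hexadecimal form and that dataspace names contain no underscore. Neither assumption is confirmed by this guide.

```python
import re
import uuid

# Assumed shape: <uuid>_ds-<dataspaceName>_<name>
# Assumptions: canonical hex uuid, no underscore in the dataspace name.
TOPIC_PATTERN = re.compile(
    r"^(?P<uuid>[0-9a-fA-F]{8}(?:-[0-9a-fA-F]{4}){3}-[0-9a-fA-F]{12})"
    r"_ds-(?P<dataspace>[^_]+)"
    r"_(?P<name>.+)$"
)


def build_topic_name(app_id, dataspace, name):
    """Compose a full stream name from its three segments."""
    if "_" in dataspace:
        raise ValueError("this sketch does not support '_' in dataspace names")
    return f"{app_id}_ds-{dataspace}_{name}"


def parse_topic_name(full_name):
    """Split a full stream name back into uuid, dataspace, and name."""
    match = TOPIC_PATTERN.match(full_name)
    if match is None:
        raise ValueError(f"not a Streaming Platform style name: {full_name!r}")
    return match.groupdict()


# Hypothetical application instance and dataspace, for illustration only.
app_id = uuid.UUID("12345678-1234-5678-1234-567812345678")
full_name = build_topic_name(app_id, "edda", "events")
parts = parse_topic_name(full_name)
```

Parsing a name that belongs to another application instance yields a different uuid segment, which is how instances sharing one installation can be told apart.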

Also see: Categories (Known as topics in Kafka)

Dataspaces

Dataspaces are logical organizational structures that help differentiate categories managed by different components.

<Automic>/Automation.Platform/Analytics/backend

Categories (Known as topics in Kafka)

Notes:

- The Event Engine feature uses the generic Kafka term topic and does not use the term Categories.

- Categories are always multi-subscriber; that is, a topic can have zero, one, or many consumers that subscribe to the data written to it.

As previously described, the Streaming Platform organizes data into Dataspaces, and within each Dataspace there are Categories. A Category in a Dataspace is mapped using a specific set of prefixes.

Note: By default, consumers are subscribed to all topics to improve performance.

How Data Flows Through the Streaming Platform

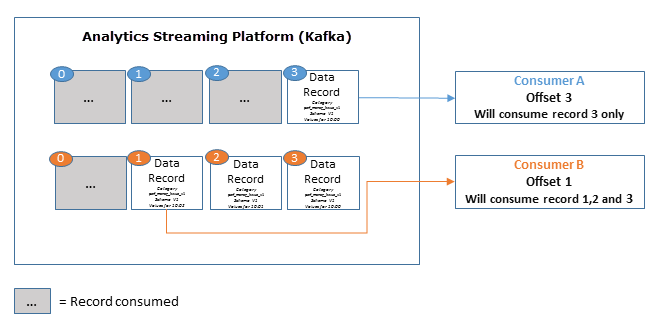

Once a consumer has consumed a record, the Streaming Platform advances that consumer's offset, so the consumer receives only newer records from then on. A new consumer that subscribes to the same topic can still consume older records, provided they are within the configured retention period.
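The offset behaviour described above can be sketched with a small in-memory model in Python. This is a simplification and not the Kafka client API: the TopicLog class is hypothetical, retention is ignored, and a new consumer is assumed to start from the oldest retained record.

```python
class TopicLog:
    """In-memory stand-in for one topic: an append-only list of records."""

    def __init__(self):
        self.records = []
        self.offsets = {}  # consumer name -> index of the next record to read

    def publish(self, record):
        """Append a record; existing consumer offsets are not affected."""
        self.records.append(record)

    def poll(self, consumer):
        """Return unread records for this consumer and advance its offset."""
        start = self.offsets.get(consumer, 0)  # unknown consumers start at 0
        batch = self.records[start:]
        self.offsets[consumer] = len(self.records)
        return batch


log = TopicLog()
log.publish("event-1")
log.publish("event-2")

first_poll = log.poll("ia-agent")    # both records, offset moves to 2
log.publish("event-3")
second_poll = log.poll("ia-agent")   # only the record published since
late_joiner = log.poll("new-consumer")  # still sees all three records
```

Each consumer keeps its own position, so one consumer reading a record does not remove it for any other consumer.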

As in the standard implementation of Kafka, the Streaming Platform retains all published records, whether or not they have been consumed, for a configurable retention period (the default is seven days).

Example:

If the retention policy is set to two days, a record can be consumed within two days of being published. After that, it is discarded to free up storage space.
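The retention rule in this example can be written as a simple time comparison. The Python sketch below uses a hypothetical helper, is_consumable, and treats retention as an exact cutoff per record. Kafka itself removes data in whole log segments, so the actual deletion time is approximate.

```python
from datetime import datetime, timedelta


def is_consumable(published_at, now, retention=timedelta(days=7)):
    """Return True while the record is still inside the retention period."""
    return now - published_at <= retention


published = datetime(2024, 1, 1, 0, 0)
two_days = timedelta(days=2)

# One and a half days after publishing: still inside a two-day retention.
within = is_consumable(published, datetime(2024, 1, 2, 12, 0), two_days)

# Three days after publishing: outside a two-day retention.
expired = is_consumable(published, datetime(2024, 1, 4, 0, 0), two_days)

# The same record is still retained under the seven-day default.
default_ok = is_consumable(published, datetime(2024, 1, 4, 0, 0))
```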